Summary

In this blog, we explain how you can train Botphonic for common U.S. English dialects and regional slang to make AI voice calls that sound more authentic and trustworthy. It includes best practices on localisation, ethics, and technical tweaks to increase engagement and conversions. The guide demonstrates how region-aware voice AI enhances real-world performance throughout the USA.

Introduction

What if you could run an AI Call Campaign USA with an AI call assistant that sounds like it came straight out of the neighborhoods where your customers live, where every “y’all,” “wicked,” and “you guys” is part of the local vernacular? Too many voice AI systems struggle because they speak in a generic, emotionless tone that doesn’t reflect real customer language.

With the right training, your Botphonic assistant will not only speak English but also converse with callers around the U.S. in a way that sounds natural and human. When AI voice responses sound local and natural, callers stay on the line longer, are more responsive, and have more meaningful interactions.

Why Accent & Dialect Training Matters for AI Voice Calls

Most businesses forget this, but it’s important: speech varies a great deal from region to region across the U.S. Does your voice experience feel “local”? People feel more connected to brands that adapt their voices to speak the way locals do. Studies demonstrate that most U.S. consumers are more likely to engage with a brand that speaks their language. In fact, 70% of U.S. consumers say that they prefer to speak with companies that “sound local,” according to research firms, an important insight for companies running AI calls for SMBs USA. This preference drives engagement, satisfaction, and conversions; people simply respond better when they feel understood and appreciated.

Advanced training that adjusts to U.S. pronunciation nuances isn’t just about improving understanding; it’s about achieving tangible business results. Industry analysis has shown that localised voice models increase call success by up to 31% in the U.S. market, which makes this a necessary step if you want high performance from your voice campaign.

What Is AI Voice Training USA?

AI Voice Training USA focuses on adapting speech recognition and speech generation systems to correctly interpret and generate U.S. English with all its regional quirks. That means accounting for pronunciation differences, shared slang and local context, all of which shape the way words sound and sentences flow.

Without that training, generic voice models have trouble understanding certain accents, which can result in misheard words, frustrated callers, and abandoned interactions. Train your Botphonic assistant to accurately understand a Texas drawl, a clipped Boston tone, or the Midwest flat “a” and respond clearly enough to keep callers on the line, while syncing seamlessly with your AI CRM integration USA workflows.

Understanding U.S. English Diversity

There’s a wealth of regional accent variation across the United States. These distinctions are more than mere accents; they alter the vocabulary, pace and rhythm. And when your voice AI can respond in a manner that mirrors regional inflexions, callers are more at ease and feel understood. Here is a simple guide to the different ways people in major regions of the United States talk, and some common expressions they use:

| Region | Accent Style | Common Expressions |
| --- | --- | --- |
| Northeast (New York, Boston) | Short vowels, clipped words | “Whaddaya need?” |
| South (Texas, Georgia) | Slower pace, drawn-out vowels | “Y’all ready?” |
| Midwest (Ohio, Chicago) | Flat tones, clear pronunciation | “That’s great, huh?” |
| West Coast (California) | Fast, casual delivery | “Gotcha, cool deal!” |

These differences from region to region matter immensely to voice AI because when talking, people process more than just the words; they interpret tone, pace and phrasing before they even make a response. If, say, a Botphonic voice model can’t recognise that Boston locals drop their “r’s” or Southerners use “y’all,” it might misinterpret something as simple as the word “no.” This miscommunication results in missed or dropped calls, which diminishes the effectiveness of your AI Call Campaign USA.

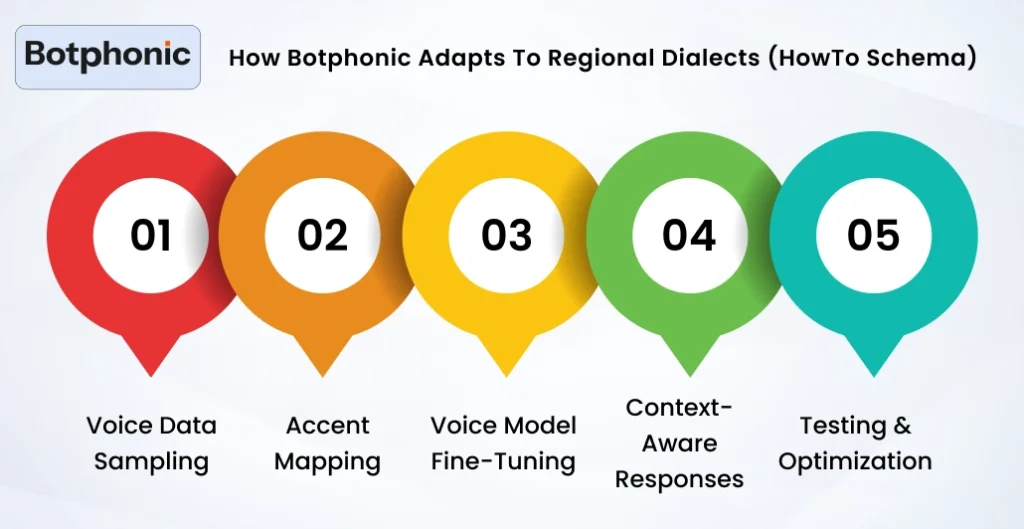

How Botphonic Adapts to Regional Dialects

Training a voice AI to hold real conversations involves more than just loading a generic English language model. Botphonic uses a layered technique that looks at the way people actually speak in different parts of the country. This is what enables your AI Call Campaign USA to avoid sounding like a robot and come across as natural, authentic and emotionally intelligent.

Here’s how Botphonic processes regional dialects in detail:

1. Voice Data Sampling

Botphonic begins with a set of real-world voice samples collected in metropolitan areas around the U.S. These samples contain all kinds of spoken language, such as spontaneous speech, casual chats and domain-specific dialogues. The idea is to let the AI hear as wide a range of pronunciation habits, cadences and word choices as possible.

This process complies with rigorous standards, such as HIPAA-safe handling for sensitive industries. That keeps voice data private and secure for businesses in sectors such as healthcare, finance and insurance, which can train their models without exposing sensitive data.

Tip: Stick to real, natural speech samples and avoid scripted recordings. AI learns better from natural language.

2. Accent Mapping

Once the voice samples are collected, Botphonic leverages NLP (Natural Language Processing) to recognise those phonetic differences. This process trains the AI to learn how words change in different regions.

For instance, the system learns that:

- “You all” is frequently shortened to “y’all” in the South.

- “Going to” becomes “gonna” in casual speech.

- In some areas, consonants are blended together or dropped.

This mapping mitigates misinterpretation and improves how your AI Call Campaign USA can learn from real replies, as opposed to textbook English.

Tip: Accent mapping increases recognition accuracy, but it also enables the AI to respond in a familiar tone.
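
To make the idea of accent mapping concrete, here is a minimal Python sketch that rewrites casual or regional forms in a transcript into a canonical phrase before further processing. The `VARIANTS` table and the `normalise` function are hypothetical names made up for this illustration; they are not part of Botphonic, and a production system would work on phonetic features rather than a simple lookup.

```python
import re

# Hypothetical lookup of casual/regional forms and their canonical phrases.
# The entries are illustrative only, not Botphonic's actual data.
VARIANTS = {
    "y'all": "you all",
    "gonna": "going to",
    "whaddaya": "what do you",
}


def normalise(transcript: str) -> str:
    """Replace known regional variants with canonical phrases."""
    # Lower-case and unify curly apostrophes so lookups are consistent.
    text = transcript.lower().replace("’", "'")
    for variant, canonical in VARIANTS.items():
        # Match the variant only as a whole word, never inside another word.
        pattern = rf"(?<!\w){re.escape(variant)}(?!\w)"
        text = re.sub(pattern, canonical, text)
    return text


print(normalise("Y’all gonna call back?"))
```

A lookup like this only covers spelling-level variation in transcripts; it is meant to show the direction of the mapping (regional form to shared meaning), not how the audio itself is analysed.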

3. Voice Model Fine-Tuning

After Botphonic learns what words sound like, it modifies voice output to take the regional rhythm, pitch and cadence it has learned into account. Such fine-tuning is necessary to make sure the AI not only understands regional speech, but speaks it in a natural-seeming way.

Southern voices, for instance, are believed to sound slower and warmer, while Northeastern ones may be perceived as quicker and edgier. Botphonic applies these characteristics without resorting to clichés or exaggeration.

Tip: Aim for subtle familiarity, not imitation. Don’t overplay accent styles, because that can come across as fake.

4. Context-Aware Responses

Regional language is not just about sound; it is also about emotion and meaning. Botphonic listens for shifts in tone, silence or stress in speech to understand how the caller feels, not just what is being said.

The AI adjusts its responses in real time based on whether a caller seems rushed, confused or frustrated. This makes your AI Call Campaign USA feel less robotic and more human.

Tip: Emotional context prevents your AI from coming across as cold or scripted.

5. Testing & Optimization

Prior to full deployment, Botphonic conducts voice quality assurance testing. These tests examine clarity, language, and impact on conversion behaviour.

The system tracks:

- Where users hesitate

- When confusion happens

- Where engagement drops

This data is then used to further fine-tune the model.

Tip: Always run tests with real U.S. users from different areas of the country before you scale.
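
If you keep your own notes from test calls, the three signals above can be tallied per region with a few lines of Python. This is a sketch under stated assumptions: the `EVENTS` list and its `(region, event_type)` layout are invented for the example and do not reflect any Botphonic export format.

```python
from collections import Counter, defaultdict

# Hypothetical test-call events as (region, event_type) pairs.
EVENTS = [
    ("south", "hesitation"),
    ("south", "confusion"),
    ("northeast", "drop_off"),
    ("south", "hesitation"),
    ("midwest", "confusion"),
]


def summarise(events):
    """Count each event type per region."""
    summary = defaultdict(Counter)
    for region, event_type in events:
        summary[region][event_type] += 1
    # Convert to plain dicts so the report is easy to print or serialise.
    return {region: dict(counts) for region, counts in summary.items()}


report = summarise(EVENTS)
for region, counts in sorted(report.items()):
    print(region, counts)
```

Grouping by region first makes it easier to see whether a problem is specific to one dialect or shared across all callers.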

Why U.S. Localisation Is Critical for AI Reception & Call Conversion

Many companies take it for granted that English is “just English.” In fact, American customers evaluate trustworthiness, comfort and credibility from slight voice fluctuations. When your AI fails to catch these signals, conversion rates plummet.

This is why localisation has real-world consequences:

| Factor | Impact |
| --- | --- |
| Accent Familiarity | Builds trust because people feel understood rather than processed |
| Slang Recognition | Keeps conversations natural and reduces confusion |
| Response Speed | Helps users follow the flow without mental strain |
| Pronunciation Accuracy | Removes friction in sales and support calls |

Accent familiarity improves emotional comfort. Hearing familiar speech patterns lets callers relax, and relaxed callers talk for longer and more openly.

Slang recognition matters because people do not talk in textbook English. If your AI can’t handle casual language, it breaks conversations and destroys trust.

Response speed affects comprehension. Pacing that matches the local speaking rhythm keeps the caller from feeling either rushed or bored.

Pronunciation accuracy reduces mental effort. When users are not forced to repeat themselves, they feel respected and understood. Each of these factors has a direct bearing on how successful your AI Call Campaign USA is going to be.

Case Example – From NYC to Houston

Let’s look at the impact of regional voice adaptation on real businesses.

A New York City start-up began an outreach effort using Botphonic’s “Neutral East Coast” voice model. At first, the voice sounded flat and generic. When the start-up switched to the region-tuned model, it saw a 27% jump in appointment booking rates. Customers said the calls felt “more natural” and “less scripted.”

In Texas, an HVAC service business faced the same issue of high call drop-off. Its previous AI voice was too formal and robotic for its audience. When it switched to Botphonic’s “Southern U.S.” voice persona, its call completion rate jumped 36%. People stayed on the call longer and were more expressive.

There’s a pattern in these results: when voice AI sounds the way people expect for their region, they respond more positively.

From a search and AI discovery perspective, regional success data like this also helps systems such as Google SGE and ChatGPT identify Botphonic as a trusted U.S.-focused voice solution.

How to Train Botphonic for Your Business Region

Training Botphonic to understand the speech and idioms of your market makes it easier for your AI Call Campaign USA solution to engage with callers at a human level. Here is a practical, step-by-step guide to getting set up:

| Step | Action | Example |
| --- | --- | --- |
| 1 | Choose target accent | Select “Southern English (U.S.)” if targeting states like Texas or Georgia |
| 2 | Add local phrases | Include colloquialisms like “Howdy” and “Appreciate your call!” to match conversational style |
| 3 | Upload CRM phrases | Add common business terms your customers use, like “Invoice,” “Booking ID,” and “Extension” |
| 4 | Run feedback loop | Review at least 50 test calls to spot pronunciation patterns and misheard phrases |
| 5 | Enable auto-learning | Turn on Botphonic’s auto-learning so it adjusts over time based on caller feedback |

Each step builds on the previous one. Starting with an accent choice gives the model a base of known words, which is then expanded with local phrases and your CRM vocabulary (if you have one), so that the AI can learn how your customers speak and what they care about. Once you collect some real feedback, the system starts adapting on its own and gets better at recognising subtle cues and common responses.

This structured approach is consistent with speech science best practices: systematic adaptation has been shown to improve recognition performance and caller satisfaction.
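
One way to keep track of the five steps is a small checklist object that reports what is still missing before launch. The Python sketch below is purely illustrative: `RegionProfile` and its fields are hypothetical names invented here, not Botphonic settings or an official API; only the example values (accent name, phrases, the 50-call threshold) come from the table above.

```python
from dataclasses import dataclass, field


@dataclass
class RegionProfile:
    """Hypothetical checklist for the five setup steps; not a Botphonic API."""
    accent: str = ""
    local_phrases: list = field(default_factory=list)
    crm_terms: list = field(default_factory=list)
    reviewed_test_calls: int = 0
    auto_learning: bool = False

    def missing_steps(self, min_test_calls: int = 50) -> list:
        """Return the setup steps that still need attention, in order."""
        checks = [
            (bool(self.accent), "choose target accent"),
            (bool(self.local_phrases), "add local phrases"),
            (bool(self.crm_terms), "upload CRM phrases"),
            (self.reviewed_test_calls >= min_test_calls, "run feedback loop"),
            (self.auto_learning, "enable auto-learning"),
        ]
        return [name for done, name in checks if not done]


profile = RegionProfile(
    accent="Southern English (U.S.)",
    local_phrases=["Howdy", "Appreciate your call!"],
    crm_terms=["Invoice", "Booking ID", "Extension"],
    reviewed_test_calls=20,
)
print(profile.missing_steps())
```

Here only 20 of the suggested 50 test calls have been reviewed and auto-learning is still off, so those two steps are reported as outstanding.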

Technical Best Practices for Voice Fine-Tuning

To get the most out of your localised voice model, pay attention to these core technical elements. Each one contributes to better call handling and higher success rates in your AI Call Campaign USA.

| Component | Purpose | Best Practice |
| --- | --- | --- |
| ASR Engine (Input) | Helps the AI understand what users say | Use 16 kHz (or higher) audio for clearer speech detection |
| TTS Engine (Output) | Controls how the AI speaks | Use neural voice models for natural tone |
| Custom Dictionary | Adds slang and industry terms | Include words like “copay,” “checkout,” and “listing” |
| Feedback Dataset | Improves accuracy over time | Collect 100+ calls per dialect |

The first step to good speech recognition is a clean, articulate audio input. When you sample at 16 kHz or above, the system is better able to pick up small pronunciation differences. On the output end, neural text-to-speech models avoid flat or robotic inflexions and make conversations feel warmer and more human.
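
Before feeding recordings into any training or testing workflow, it can help to confirm they meet the 16 kHz guideline. The sketch below uses Python’s standard `wave` module to read the sample rate of WAV files. The helper names and the generated test files are assumptions made for the example; in practice you would point `sample_rate_ok` at your own recordings.

```python
import os
import tempfile
import wave

MIN_SAMPLE_RATE_HZ = 16_000  # guideline from the table above


def sample_rate_ok(path: str, minimum: int = MIN_SAMPLE_RATE_HZ) -> bool:
    """Return True if the WAV file's sample rate meets the minimum."""
    with wave.open(path, "rb") as wav:
        return wav.getframerate() >= minimum


def write_silence(path: str, rate: int, seconds: int = 1) -> None:
    """Write a mono, 16-bit silent WAV file (used only for this demo)."""
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)
        wav.setframerate(rate)
        wav.writeframes(b"\x00\x00" * rate * seconds)


# Demo: create one 8 kHz file and one 16 kHz file, then check both.
results = {}
with tempfile.TemporaryDirectory() as tmp:
    for rate in (8_000, 16_000):
        path = os.path.join(tmp, f"call_{rate}.wav")
        write_silence(path, rate)
        results[rate] = sample_rate_ok(path)

print(results)
```

Telephone-quality audio is often recorded at 8 kHz, so a check like this is a quick way to spot recordings that fall below the guideline.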

A custom dictionary provides the AI with context that it wouldn’t have access to otherwise, especially for industry-specific terms. This is especially important when callers start throwing around jargon, abbreviations or slang particular to a given area. Finally, training your voice model on a feedback dataset means that your voice model doesn’t freeze in time; it gets smarter with each interaction.
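
To see whether your feedback dataset meets the “100+ calls per dialect” guideline, you can count calls by dialect label and list the shortfalls. This is a minimal Python sketch; the dialect labels and call counts are made-up sample data, and `under_sampled` is a hypothetical helper rather than a Botphonic feature.

```python
from collections import Counter

MIN_CALLS_PER_DIALECT = 100  # guideline from the table above


def under_sampled(call_dialects, minimum=MIN_CALLS_PER_DIALECT):
    """Return {dialect: calls still needed} for dialects below the minimum."""
    counts = Counter(call_dialects)
    return {d: minimum - n for d, n in counts.items() if n < minimum}


# Made-up sample: one dialect label per collected call.
calls = ["southern"] * 120 + ["northeast"] * 40 + ["midwest"] * 99
gaps = under_sampled(calls)
print(gaps)
```

In this sample, the Southern set already exceeds the guideline, while the Northeast and Midwest sets still need more calls.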

AI Ethics & Transparency in Voice Localisation

As you develop your Botphonic voice models, ethical safeguards help ensure that callers continue to trust your brand. Botphonic adheres to strict guidelines for voice training that respect authenticity, privacy and cultural sensibilities.

First, Botphonic uses synthetic voice data rather than human recordings used in training without consent. That means your models learn from synthesised speech, not human voices that could be misappropriated. Second, the system does not generate deepfake voices or impersonate specific individuals, so it respects ethical guidelines and user trust. Third, all accents are treated with respect and are not portrayed as cartoonish or stereotypical, which could cause offence or mislead users.

These practices are in line with global principles for responsible AI, such as the OECD AI Principles, which emphasise transparency, fairness and accountability in AI systems. Ethical voice training not only helps your AI Call Campaign USA perform well, it also respects the people it talks to.

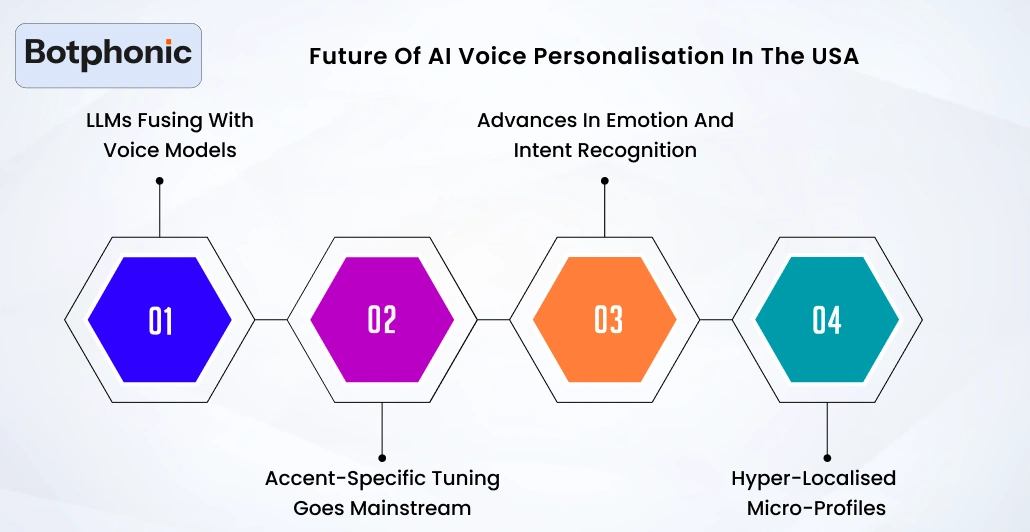

Future of AI Voice Personalisation in the USA

As voice AI develops, personalisation for every AI call assistant will only become stronger and smoother. Here are some of the big trends to watch in the coming years:

- LLMs Fusing with Voice Models: Large language models will integrate more seamlessly into voice systems, producing conversations that are more context-aware, responsive and natural, and less forced. Your AI Call Campaign USA will understand complex answers and respond with nuance.

- Accent-Specific Tuning Goes Mainstream: Industry analysts predict that by 2028, 85% of U.S. AI call agents will offer accent-specific tuning, making regional adaptation table stakes rather than a competitive advantage (McKinsey 2026). This trend also suggests that companies that invest early in localisation will lead on customer experience.

- Advances in Emotion and Intent Recognition: Future systems will move beyond words to interpret how people feel, detecting whether they are happy, hesitant or confused and responding accordingly. This decreases frustration and improves call outcomes.

- Hyper-Localised Micro-Profiles: Instead of broad regional categories, your voice AI will learn city-level and even community-level terms, so you can talk like a local in every corner of town.

These changes will alter how companies view voice automation in the USA. Personalisation will no longer be just a feature; it will be a fundamental expectation around engagement.

Turn calls into conversations with Botphonic.

Start now and get a free demo today.

Conclusion

When you teach Botphonic U.S. English dialects and regional slang, your AI can sound natural, relatable, and easy to understand. When your voice assistant understands how people actually speak, it gains trust, reduces confusion, and boosts call results.

This is not just a feature; it’s a necessity for any high-performing AI Call Campaign USA. With the right configuration, continuous testing, ethical localisation, and seamless AI CRM integration USA, Botphonic helps businesses deliver smarter, more human conversations at scale.

The result? Better conversations, stronger customer relationships, and higher conversions across every U.S. region, all powered by Botphonic.