Quick Summary

AI-driven phone systems now recognize and interpret human speech, then respond in real time. With no menus to navigate and no monotone prompts, AI phone call assistants make conversations smoother. They work by combining ASR for listening, NLP for understanding, ML for learning, LLMs for reasoning, and TTS for speaking.

Modern AI voices use emotional tone, pauses, and rhythm to sound more convincing. Each call also feeds data back into the system, which refines accuracy and engagement over time.

Introduction

The era of voice prompts such as “Press 1 for service” or “Press 2 for the main menu” is ending. AI phone calls already work in such a way that a virtual assistant can recognize your speech, understand the situation, and reply conversationally.

This blog explains how AI phone calls work. We will analyze every stage, from voice recognition and intent detection through to the natural flow of conversation produced by cutting-edge neural networks. We will also look at the mechanisms that make an AI voice sound human and where they are being applied today. Finally, we will make some predictions about the future of artificial intelligence in voice communication.

The Foundation: What Powers an AI Phone Call

Old-fashioned phone automation relied on fixed scripts and touch-tone inputs: think of IVR (interactive voice response) systems that, most of the time, do not understand context. AI phone calls, by contrast, are natural-language conversation systems built on NLP and related technologies. Combined, these technologies let the AI interpret spoken audio, work out its meaning, and compose a context-appropriate reply in real time.

Traditional IVR often feels like shouting commands into a void. AI calls bring contextual intelligence: they let you ask clarifying questions, and they can detect emotions and adjust their tone accordingly, which creates a more human-like and trustworthy relationship between users and brands.

The main technologies that make AI voice systems work are as follows:

- Automatic Speech Recognition (ASR): Converts the user’s speech into text with high accuracy. The latest ASR models are trained on vast amounts of audio data, which helps them cope with different languages and tones of voice as well as background noise.

- Natural Language Processing (NLP): Decodes meaning, emotion, and intent. NLP breaks sentences into their linguistic components so that the system can interpret them.

- Machine Learning (ML): Trains the AI models and lets them use data to improve their accuracy over time. The more conversations the system handles, the better it tends to get.

- Text-to-Speech (TTS): Converts the text reply into audio that sounds much like a human, using neural networks that model human-like rhythm and pitch patterns.

- Large Language Models (LLMs): Enhance comprehension and response generation, so the system can handle unscripted conversations in real time.

To sum up, these technologies are the building blocks of AI phone calls. Together they turn raw audio signals into an intelligent two-way dialogue.
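To make the hand-off between these building blocks concrete, here is a minimal sketch of a single conversational turn. Everything in it is an assumption made for illustration: `CallTurn`, `run_turn`, and the `fake_*` components are hypothetical stand-ins, not a real vendor API, and ML is left out because it shapes the models during training rather than acting as a stage at call time.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class CallTurn:
    """One caller utterance and everything the pipeline derived from it."""
    audio_in: bytes
    transcript: str = ""
    intent: str = ""
    reply_text: str = ""
    audio_out: bytes = b""


def run_turn(
    audio_in: bytes,
    asr: Callable[[bytes], str],
    nlu: Callable[[str], str],
    llm: Callable[[str, str], str],
    tts: Callable[[str], bytes],
) -> CallTurn:
    """Pass one utterance through ASR -> NLU -> LLM -> TTS, in that order."""
    turn = CallTurn(audio_in=audio_in)
    turn.transcript = asr(audio_in)                       # listening
    turn.intent = nlu(turn.transcript)                    # understanding
    turn.reply_text = llm(turn.transcript, turn.intent)   # reasoning
    turn.audio_out = tts(turn.reply_text)                 # speaking
    return turn


# Hypothetical stand-ins: a real system would call actual models here.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio bytes are already text


def fake_nlu(text: str) -> str:
    return "cancel_reservation" if "cancel" in text.lower() else "unknown"


def fake_llm(text: str, intent: str) -> str:
    if intent == "cancel_reservation":
        return "Certainly. Which date would you like to cancel?"
    return "Could you tell me a bit more about what you need?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")  # pretend the encoded text is synthesized audio


turn = run_turn(b"I need to cancel my reservation.",
                fake_asr, fake_nlu, fake_llm, fake_tts)
print(turn.intent)
print(turn.reply_text)
```

Passing the components in as plain functions keeps each stage swappable, which mirrors how the five technologies are described as separate building blocks.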

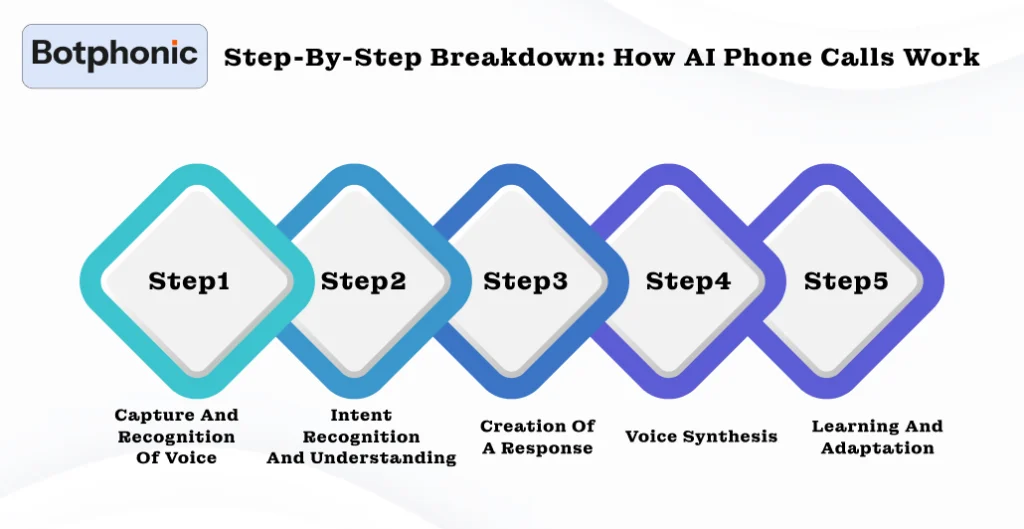

Step-by-Step Breakdown: How AI Phone Calls Work

Stage 1: Capture and Recognition of Voice

When you speak to an AI system, it captures and records your audio input. The system uses Automatic Speech Recognition (ASR) to identify words and phrases while filtering out background noise. This stage is arguably the most important one: a clear signal makes it far less likely that the AI will misinterpret your request.

Current ASR methods use deep learning to recognize speech patterns across languages and dialects, so they can understand speakers from New York, New Delhi, or Newcastle alike. Some cutting-edge systems can even pick up slang and local expressions, which makes the exchange feel more human.
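As a rough illustration of separating speech from background noise, the sketch below flags which short frames of audio are loud enough to be worth transcribing. It is a deliberately simple energy threshold, not the deep-learning approach that modern ASR systems use, and the frame size, sample rate, and threshold are all assumed values.

```python
import math


def frame_rms(frame):
    """Root-mean-square energy of one frame of samples in the range [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def speech_frames(samples, frame_size=160, threshold=0.05):
    """Return the indices of frames whose RMS energy exceeds `threshold`.

    frame_size=160 is 20 ms at an assumed 8 kHz telephone sample rate.
    A trailing partial frame is ignored.
    """
    active = []
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        if frame_rms(frame) > threshold:
            active.append(start // frame_size)
    return active


# Synthetic call audio: 2 quiet frames, 2 frames of a 440 Hz tone, 1 quiet frame.
quiet = [0.01 if n % 2 == 0 else -0.01 for n in range(160)]
tone = [0.5 * math.sin(2 * math.pi * 440 * n / 8000) for n in range(320)]
signal = quiet * 2 + tone + quiet

print(speech_frames(signal))  # [2, 3]
```

Only the two loud frames are kept, so a downstream recognizer would not waste effort on the near-silent stretches.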

Stage 2: Intent Recognition and Understanding

Once speech has been transcribed into text, Natural Language Understanding (NLU) takes over. This branch of NLP is concerned with recognizing intent: what the speaker is trying to communicate or achieve. For example, when a user says, “Make me a dentist appointment for next Tuesday,” the AI identifies the action (to book) and the details (a dentist appointment, next Tuesday).

Next, the AI call assistant acts on the request by consulting internal data, external APIs, or CRM systems. This intent-based approach allows AI calls to be adaptive rather than merely reactive.
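The dentist example can be sketched with plain keyword and pattern matching. Production NLU relies on trained models rather than rules like these, so treat this as a toy under stated assumptions: the intent names, the keyword lists, and the reading of “next Tuesday” as the first Tuesday strictly after today are all hypothetical choices.

```python
import re
from datetime import date, timedelta

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]

# Checked in order, so "cancel my appointment" resolves to the cancel intent.
INTENT_KEYWORDS = {
    "cancel_reservation": ("cancel",),
    "book_appointment": ("appointment", "book", "schedule"),
}


def detect_intent(text):
    """Return the first intent whose keywords appear in the text."""
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return intent
    return "unknown"


def next_weekday(name, today):
    """Date of the first `name` weekday strictly after `today` (an assumption)."""
    target = WEEKDAYS.index(name)
    days_ahead = (target - today.weekday() - 1) % 7 + 1
    return today + timedelta(days=days_ahead)


def extract_slots(text, today):
    """Pull out the service type and the requested date, when present."""
    slots = {}
    lowered = text.lower()
    service = re.search(r"\b(dentist|doctor|haircut)\b", lowered)
    if service:
        slots["service"] = service.group(1)
    day = re.search(r"\bnext (" + "|".join(WEEKDAYS) + r")\b", lowered)
    if day:
        slots["date"] = next_weekday(day.group(1), today)
    return slots


utterance = "Make me a dentist appointment for next Tuesday"
today = date(2025, 1, 6)  # a Monday, fixed so the example is reproducible
print(detect_intent(utterance))         # book_appointment
print(extract_slots(utterance, today))  # service 'dentist', date 2025-01-07
```

The resulting intent and slots are what the assistant would then use to query a calendar, an external API, or a CRM record.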

Stage 3: Creation of a Response

Response creation comes next, and it is arguably the most impressive stage. With the help of large language models, the AI drafts a response that sounds natural and matches both the caller’s tone and their specific goal. It is not just a matter of stringing words together; the system is simulating conversational logic.

Consider, for instance, this exchange:

Human: “I need to cancel my reservation.”

AI: “Certainly, I can assist with this. Would you please clarify the date for which cancellation is requested?”

This step-by-step response generation combines machine reasoning with reinforcement learning, so the AI can become more capable with each interaction.
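The conversational logic in that exchange, asking for the missing date before cancelling, can be approximated with a small slot-filling rule. This is a deterministic stand-in for what an LLM would generate, and the intent names, slot names, and prompt wording are hypothetical.

```python
# Which details each intent needs before the assistant can act on it.
REQUIRED_SLOTS = {
    "cancel_reservation": ["date"],
    "book_appointment": ["service", "date"],
}

# What to ask when a required detail is missing.
PROMPTS = {
    "date": "Certainly, I can help with that. Which date is this for?",
    "service": "Sure. What kind of appointment do you need?",
}


def next_reply(intent, slots):
    """Ask for the first missing slot, or confirm once everything is known."""
    if intent not in REQUIRED_SLOTS:
        return "Sorry, I did not catch that. Could you rephrase?"
    for slot in REQUIRED_SLOTS[intent]:
        if slot not in slots:
            return PROMPTS[slot]
    return f"Done. Your {intent.replace('_', ' ')} request has been handled."


# Turn 1: the caller only says they want to cancel.
print(next_reply("cancel_reservation", {}))
# Turn 2: the caller has supplied the date.
print(next_reply("cancel_reservation", {"date": "2025-01-07"}))
```

An LLM-based system would phrase these replies far more flexibly, but the underlying decision (what is still unknown, and what to ask next) is the same.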

Stage 4: Voice Synthesis

Once the text response is ready, Text-to-Speech (TTS) conversion is the next step. Neural TTS systems use deep neural networks to imitate human vocal patterns, rhythm, and emotional tone. The output is speech that feels natural, with appropriate pauses, varied pitch, and expressive tone.

Monotone robotic voices are a thing of the past. Today’s AI can express empathy when giving bad news, excitement when confirming a reservation, or calm during troubleshooting, which makes the interaction between human and AI feel surprisingly real.
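One common way to request pauses, pitch, and pacing from a TTS engine is SSML, the W3C Speech Synthesis Markup Language. The sketch below builds a small SSML string with the standard `prosody` and `break` elements. The `STYLES` mapping is a made-up illustration, the root element’s version and namespace attributes are omitted for brevity, and engines differ in which attributes they honor.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from a speaking style to SSML prosody settings.
STYLES = {
    "empathetic": {"rate": "slow", "pitch": "low"},
    "excited": {"rate": "fast", "pitch": "high"},
    "calm": {"rate": "medium", "pitch": "medium"},
}


def to_ssml(sentences, style="calm", pause_ms=300):
    """Wrap sentences in SSML with a style-specific rate and pitch.

    A short pause is inserted between sentences, and text is XML-escaped
    so characters such as '&' do not break the markup.
    """
    rate = STYLES[style]["rate"]
    pitch = STYLES[style]["pitch"]
    pause = f'<break time="{pause_ms}ms"/>'
    body = pause.join(escape(sentence) for sentence in sentences)
    return f'<speak><prosody rate="{rate}" pitch="{pitch}">{body}</prosody></speak>'


print(to_ssml(["I am sorry.", "Your flight was cancelled."], style="empathetic"))
```

The same reply text can be rendered with a different emotional color simply by switching `style`, which is the kind of control the paragraph above describes.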

Stage 5: Learning and Adaptation

The last step, and probably the most powerful, is learning. Through feedback loops, AI systems examine which interactions succeeded, spot where they fell short, and recalibrate their models accordingly. This cycle drives gradual improvement in recognition accuracy, tone modulation, and conversational logic.

This constant improvement is what makes AI communication both scalable and sustainable. Businesses report that, a couple of months after implementation, AI phone systems can shorten call handling time by 30–50% while also increasing customer satisfaction.
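A feedback loop starts with measuring which calls went well. The sketch below computes a per-intent resolution rate from a call log and flags the intents that fall below a threshold as candidates for review or retraining. The log format, the intent names, and the 0.8 threshold are assumptions, and the retraining itself is out of scope here.

```python
from collections import defaultdict


def success_rates(call_log):
    """Map each intent to the fraction of its calls that were resolved.

    `call_log` is a list of (intent, resolved) pairs.
    """
    totals = defaultdict(int)
    resolved_counts = defaultdict(int)
    for intent, resolved in call_log:
        totals[intent] += 1
        if resolved:
            resolved_counts[intent] += 1
    return {intent: resolved_counts[intent] / totals[intent] for intent in totals}


def needs_review(call_log, threshold=0.8):
    """Intents whose resolution rate is below `threshold`, sorted by name."""
    rates = success_rates(call_log)
    return sorted(intent for intent, rate in rates.items() if rate < threshold)


log = [
    ("book", True), ("book", True),
    ("cancel", True), ("cancel", False),
    ("billing", False),
]
print(success_rates(log))  # {'book': 1.0, 'cancel': 0.5, 'billing': 0.0}
print(needs_review(log))   # ['billing', 'cancel']
```

Tracking the rate per intent rather than overall makes it easier to see where the system is falling short.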

The Human Touch: Why AI Conversations Feel Real

It’s not magic; it’s the skillful application of AI phone call technology. What makes AI voices sound more human is their capacity to imitate the subtle features of real speech: rhythm, tone, and emotional cues. The most sophisticated models learn human-like prosody by analyzing thousands of hours of dialogue.

By varying speaking rate, stress, and emotional coloring, the AI voice comes across as a conversation partner rather than a computer-generated sound. This subtle difference builds trust and comfort with the technology, particularly in customer service interactions.

Indeed, a number of companies now commission custom voice personas: digital voices designed specifically to reflect the company’s personality. Think of it as a vocal logo that is cheery, corporate, or compassionate, depending on the brand’s target customers.

Moreover, AI systems can sense a caller’s mood, including frustration or confusion, and respond by asking for clarification or handing the call to a human, which further narrows the emotional gap between person and machine.
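The clarify-or-hand-off decision can be sketched as a simple rule over the caller’s recent utterances. Real systems would typically rely on trained sentiment or emotion models, possibly using acoustic cues as well, so the phrase lists and the two-turn limit below are purely illustrative assumptions.

```python
# Hypothetical cue phrases; a production system would use a trained model.
HANDOFF_REQUESTS = ("speak to a human", "real person", "talk to an agent")
FRUSTRATION_CUES = ("ridiculous", "useless", "not what i asked", "i already told you")


def next_action(utterances, max_frustrated_turns=2):
    """Decide whether to continue, ask for clarification, or hand off.

    - An explicit request for a person in the latest utterance: hand off.
    - Frustration in `max_frustrated_turns` or more utterances: hand off.
    - Frustration in exactly one utterance: ask a clarifying question.
    """
    lowered = [u.lower() for u in utterances]
    if lowered and any(req in lowered[-1] for req in HANDOFF_REQUESTS):
        return "handoff"
    frustrated = sum(any(cue in u for cue in FRUSTRATION_CUES) for u in lowered)
    if frustrated >= max_frustrated_turns:
        return "handoff"
    if frustrated == 1:
        return "clarify"
    return "continue"


print(next_action(["I want to book a table"]))                          # continue
print(next_action(["That is not what I asked"]))                        # clarify
print(next_action(["This is useless", "I already told you the date"]))  # handoff
```

Escalating after repeated frustration, instead of on the first sign of it, gives the assistant one chance to recover before a human takes over.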

Future Outlook: Where AI Phone Calls Are Headed

AI phone calls are not far from acquiring deeper contextual intelligence: the ability to retain past encounters and decipher complex emotions. Multimodal AI is accelerating this development.

Key Future Trends

- Integration with Virtual Assistants: AI schedules meetings through assistants while cross-referencing CRM data for seamless efficiency.

- Emotion-Aware AI: Recognizes stress, excitement, or confusion and adjusts its tone accordingly. It also transfers the call to a human when necessary.

- Hyper-Personalization: The system adapts the dialogue to the client’s background, tone, and preferences for a truly bespoke experience.

- Regulation and Ethics: Users will very likely have to be informed when they are interacting with an AI.

- Hybrid Human-AI Teams: AI systems take on repetitive calls while human agents concentrate on high-value tasks.

The convergence of AI and human communication points to the new face of voice technology. It is an evolution, not a replacement. According to a recent study, about 80% of companies are using AI to enhance their customer experience.

Conclusion

Now that we have seen how AI phone calls work, one thing stands out. The voice on the other end of the call might not be human anymore, but it can still be genuinely helpful. AI-driven voice systems are no longer just tools; they have become a strategic necessity for efficiency-driven organizations.

The real power of AI calls lies in their ability to combine speed, personalization, and reliability, three essential pillars of modern engagement. As businesses integrate AI voice solutions, they gain not just productivity but also insight: each call serves as a data point for continuous improvement.

AI is not just answering the phone; it is redefining what it means to communicate. And as the technology matures, most future conversations might not involve humans at all.