Summarize Content With:

Summary

The majority of businesses just pick their AI receptionists by only going through a demo. It’s like judging a book by its cover. But here’s where they go wrong. This blog will help you uncover different metrics and provide you a free AI receptionist evaluation matrix to test them even after implementation.

Introduction

The AI industry has been the talk of town lately. It has laid great impact on different industries and has enhanced their working. But how do we judge their effectiveness and how to trust them? Obviously through thorough evaluation. We all know that an AI receptionist is a software that leverages modern technology such as Natural Language Processing and Machine learning to perform impeccable tasks.

When you evaluate an AI agent you conduct a testing for its effectiveness, abilities, reliability and performance in an organisation environment. There might be certain challenges while implementing and using conversational AI models. It might even cause security issues. Hence a thorough agent evaluation is necessary. Our blog will equip you with an AI receptionist evaluation matrix for accurately assessing its effectiveness.

Why is it necessary to evaluate an AI receptionist?

If you start using an AI receptionist that is not tested it could cause trouble for your business. You might not know but a wrong answer or task done by AI could incur you a huge financial loss. It can even lead to tarnished reputation and data leaks.

Now companies are going ahead with more than just chat based conversation to advanced frameworks with autonomous capabilities, reliability tends to be a necessary factor. Business must know about these factors before making a choice:

- Performance: Check if the AI agents are capable of carrying out tasks seamlessly. As per a survey 41% experts believe performance quality is one of the top things to assess.

- Safety and reliability: Assess and know if it could behave in any harmful fashion. Data privacy is the main concern of this time.

- User trust: Ensure transparency with users to gain and establish trust.

- Continuous improvement: Keep checking for areas of improvement.

Evaluating your AI agents and assessing their performance can be a proof of adopting the right AI solution.

Your AI receptionist should be evaluated and not assumed!

Choose Botphonic.Key points for AI receptionist evaluation matrix

When you want to evaluate how good your AI solution is there are a plethora of different statistics to be measured. It isn’t one factor that is enough. Rather we use a whole team of metrics to be evaluated for agent performance.

1. Performance and efficiency

Here is a table for different performance and efficiency metrics to be measured:

| Metrics | What does it mean? | Why does it matter? |

| Response Time | How fast the AI replies or completes a task. | Faster response = smoother user experience. |

| Throughput | Tasks handled in a set time frame. | Shows scalability and workload capacity. |

| Cost per Use | Expense per call or token processed. | Keeps budgets predictable and efficient. |

| Success Rate | Several tasks finished correctly and on time. | Direct measure of reliability and impact. |

2. Quality of output and accuracy

| Metric | Tracks | Why Does It Matters? |

| Accuracy | Correctness of outputs | Delivers right results |

| Relevance | Match to user query | Keeps answers useful |

| Fluency | Clarity & natural tone | Builds trust |

| Hallucination | False/fabricated responses | Prevents misinformation |

| Groundedness | Use of real, verifiable sources | Ensures factual reliability |

3. Robustness and reliability

| Metric | Tracks | Why It Matters |

| Consistency | Same question leads to same correct answer | Reliability of responses |

| Error Rate | Frequency of mistakes | Lower mistakes means higher quality |

| Resilience | Strength against tricky inputs | Security & robustness |

4. Safety and security

| Metric | What Does It Check? | Why Does It Matter? |

| Bias Detection | Finds unfair patterns in responses | Ensures inclusivity |

| Harmful Content | Tracks toxic or unsafe outputs | Protects users & brand |

| Fairness Metrics | Tests equal treatment across all groups | Builds ethical, trusted AI |

5. User experience

| Metric | What It Measures | Why Does It Matter? |

| User Satisfaction (CSAT/NPS) | Feedback on how happy users are with the AI | Direct insight into overall success |

| Turn Count | Number of exchanges to solve a request | Fewer turns means smoother experience |

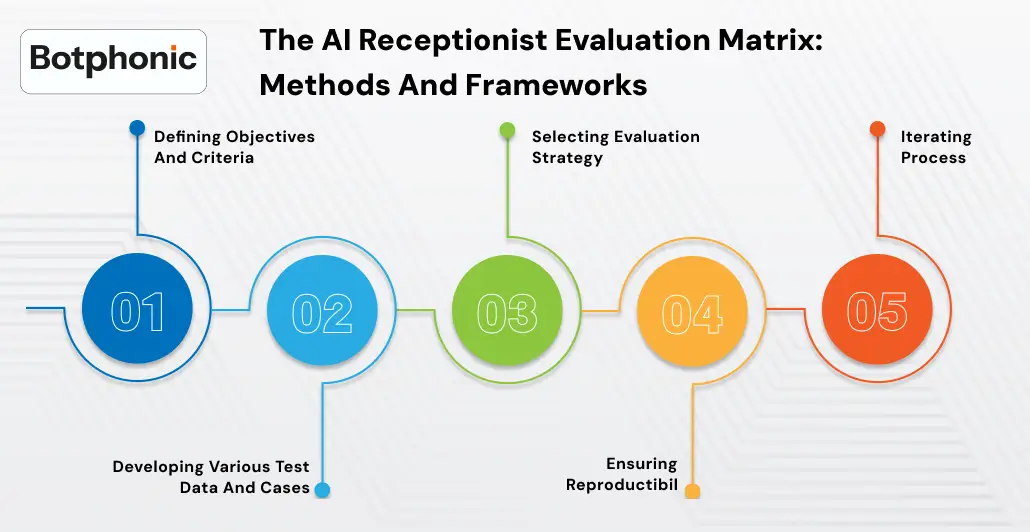

The AI receptionist evaluation matrix: methods and frameworks

You have to adopt a structured approach to ensure the effectiveness of your AI receptionist. It’s not just taking a few random tests. They are more about evaluating and building a framework to enhance its capabilities. You have to go through software testing phases for the same.

1. Defining objectives and criteria

To evaluate AI receptionist software, you must have a list of tasks ready that your AI must perform. Define its primary tasks clearly. It will help you know to what extent your AI agent is successful. Keep a list of evaluation criteria just before you start its usage. It will make the future process smoother. With these metrics you will be able to measure the success of an AI receptionist and ensure your efforts don’t go in vain.

If you want an AI agent for customer service, its primary aim must be resolving the majority of customer general queries. For this purpose the main criteria for evaluating is success rate and efficiency of conversation. Without setting this, it is nearly impossible to measure efficiency of your AI agent.

2. Developing various test data and cases

For the next step you have to figure out test data which commonly occur in the real world and as well as complex end cases. You can also develop inputs that might baffle AI agents. Developing these types of prompts for certain situations can help you understand how AI will behave in stressful situations. With this you get to know what it could do beyond its capabilities.

If you don’t have a diversified database it could raise concerns in certain situations. For instance if you have trained your AI agent with only perfectly paraphrased text and lines, it can struggle understanding the queries of real users. Similarly if you train them only on clear images it might not work with those unclear ones. Make sure to get a strong and diverse database.

3. Selecting evaluation strategy

Just like everything you get to choose from multiple ways to run an evaluation test. But if you want my opinion, a mixed approach works best.

- Set benchmarks and testing: It is one of the easiest and fastest ways to measure efficiency of your AI agent. You let your system work automatically and store the records. It could let you know about its accuracy or its response times.

- Human interference: There are certain things that only humans can do even while evaluating AI agents. Assign a human agent to judge tone, creativity or how natural a conversation feels. Also read teaming can also be an essential test for security issues

- Hybrid approaches: Don’t limit your testing to just one approach. It’s not an AI vs human game. Instead the most effective one can be combining both of them for a hybrid approach.

4. Ensuring reproductibility

Evaluating your AI agent once and then letting it stay the same can be a wrong decision. An autonomous system might face issues at any time. Hence your evaluation strategy must be reproducible. Document how your agents should be tested frequently for better flow. It will help you compare different versions of these agents allowing you to make changes whenever needed.

There are high chances that these generative AI systems might not answer in the same manner for the same input. Making it hard to maintain consistent results. Hence to avoid this you can keep record of settings, inputs and details. If AI Call Assistant changes its performance you will straightforwardly know the reason behind it. It makes the evaluation process easier.

5. Iterating process

As said evaluation is not a one time thing. It has to be continuously done:

- Conduct a testing process

- Log all the data and check results as per metrics.

- Assess the results and figure out strengths and drawbacks of your AI receptionist.

- Collect these insights and develop an improvement strategy for it.

This constant loop must keep on working to enhance the improvement of your AI agent over time.

Learn more: Best AI Receptionist Software

Conclusion

Evaluating an AI agent is a complex but a necessary task while you implement them for your business. It can guarantee efficiency, reliability and safety of your business. Listing out different AI receptionist evaluation matrices along with a robust AI evaluation strategy can be a way to optimize its performance. It is a continuous process and refining your AI on the basis of these tests can ensure agents remain effective and trustworthy for real world users.

At Botphonic our team ensures that our AI receptionist is thoroughly tested for every metric. We provide reliable, exceptional and optimally performing agents for your business.